AI-Powered Language Learning Platform

A comprehensive German language learning ecosystem combining conversational AI practice, structured courses, and intelligent tutoring tools to accelerate fluency through personalized, engaging experiences.


Overview & Challenge

Traditional language learning suffers from limited conversation practice, generic curriculum, and expensive tutoring. Students struggle to find speaking partners, lose motivation with rigid lesson structures, and receive delayed feedback. Teachers face time-consuming lesson planning, manual progress tracking, and difficulty personalizing instruction at scale.

Verbly.me aimed to bridge these gaps by creating a dual-sided platform: empowering learners with AI conversation partners available 24/7 for any topic while equipping teachers with intelligent automation tools for lesson creation, vocabulary management, and real-time student insights. The challenge was designing experiences that felt natural for both spontaneous AI practice and structured learning paths, building trust in AI feedback accuracy, and creating workflows that genuinely saved teachers time rather than adding complexity.

My Role & Impact

As Senior Product Designer, I led end-to-end UX/UI design from discovery through launch and post-release iterations.

Duration: January 2025 – September 2025 (9 months)

Team: 2 PMs, 4 Full-stack Engineers, 2 AI/NLP Engineers, 1 Education Consultant, 1 QA

Tools: Figma, FigJam, Maze, UserTesting, Mixpanel, Jira, Confluence

Research & Discovery

Research Scope & Methodology

- 56 in-depth interviews (learners and teachers)

- 240+ survey responses across 18 countries

- 12 language learning platforms analyzed

- 8 usability testing rounds

Phase 1: User Research (Weeks 1-4)

- Conducted 56 interviews: 34 German learners (CEFR levels A1-C1) and 22 language teachers (independent tutors and language school instructors)

- Deployed bilingual surveys: 240+ responses identifying key pain points, learning preferences, technology comfort, and willingness-to-pay

- Created 6 user personas: 4 learner types (casual explorer, exam-focused student, business professional, heritage speaker) and 2 teacher types (independent tutor, institutional instructor)

- Mapped 5 user journeys: solo practice, tutor-guided learning, exam preparation, vocabulary acquisition, and speaking confidence building

Key Research Insights

Learner Personas

Persona: Casual Explorer

Age: 22-35

Level: A1-A2

Goal: Learn for travel/hobby

Key Traits:

- Practices irregularly (2-3x/week)

- Motivated by fun, not pressure

- Loves gamification

- Short attention span

Pain Point: Loses motivation quickly; needs engaging content

Persona: Exam-Focused Student

Age: 18-28

Level: B1-C1

Goal: Pass Certificate Exam

Key Traits:

- Highly disciplined

- Practices daily (30-60 min)

- Wants measurable progress

- Time-pressured (exam deadline)

Pain Point: Needs structured curriculum; fears wasting time on irrelevant content

Persona: Business Professional

Age: 28-45

Level: B1-B2

Goal: Career advancement

Key Traits:

- Very limited time

- Wants practical vocabulary

- Needs flexible scheduling

- ROI-focused mindset

Pain Point: Can't commit to fixed schedules; needs business-specific content

Persona: Heritage Speaker

Age: 25-40

Level: A2-B2 (uneven)

Goal: Connect with roots

Key Traits:

- Strong listening, weak writing

- Emotional connection to language

- Embarrassed by gaps

- Perfectionist tendencies

Pain Point: Standard courses too basic or too advanced; needs personalized approach

Teacher Personas

Persona: Independent Tutor

Age: 28-50

Students: 10-30 individual learners

Teaching Style: Personalized, flexible

Key Traits:

- Works from home/coffee shops

- Manages own schedule & pricing

- Wears many hats (teacher, marketer, admin)

- Tech-savvy but overwhelmed by tools

- Income dependent on student retention

Pain Point: Spends 60% of time on admin (scheduling, materials, invoicing) vs. teaching; needs automation to scale income

Persona: Institutional Instructor

Age: 30-55

Students: 80-150 in multiple classes

Teaching Style: Curriculum-based, structured

Key Traits:

- Works for language school/university

- Must follow institutional curriculum

- Teaches multiple classes simultaneously

- Limited by school's approved tools

- Focused on measurable outcomes

Pain Point: Can't personalize for 100+ students; manual grading and progress tracking unsustainable at scale

Phase 2: Competitive Analysis

Analyzed 12 platforms including Duolingo, Babbel, italki, Preply, Rosetta Stone, Busuu, HelloTalk, Tandem, and emerging AI tutors. Evaluated:

- Conversation practice mechanisms and AI implementation quality

- Course structure, content delivery, and personalization approaches

- Teacher tools, marketplace models, and scheduling systems

- Gamification strategies and retention mechanics

- Feedback quality, correction methods, and progress visualization

Key finding: The market is split between "app-based learning" (gamified but with limited conversation) and "marketplace tutoring" (personalized but expensive and scheduling-dependent). This leaves an opportunity for a hybrid model that combines AI-powered practice with optional human guidance.

Competitive Landscape Matrix: Personalization vs. Conversation Practice Quality

| Platform | Type | Personalization | Conversation Practice | Key Strengths | Key Gaps |
|---|---|---|---|---|---|
| Duolingo | App | Medium 6/10 | Low 3/10 | Gamification, streaks, adaptive difficulty | Limited speaking practice, scripted dialogues |
| Babbel | App | Medium 5/10 | Medium 4/10 | Structured curriculum, practical dialogues | Limited free-form conversation, no AI tutor |
| Rosetta Stone | App | Low 3/10 | Medium 5/10 | Immersive method, speech recognition | One-size-fits-all, expensive, dated UX |
| Busuu | App | Medium 6/10 | Medium 5/10 | Community feedback, official certificates | Limited speaking opportunities, slow feedback |
| italki | Marketplace | High 9/10 | High 9/10 | Real human tutors, fully personalized | Expensive ($15-40/hr), scheduling hassles, no structure |
| Preply | Marketplace | High 9/10 | High 9/10 | Vetted tutors, flexible booking | Expensive, quality varies, no self-study tools |
| HelloTalk | Community | High 8/10 | Medium 6/10 | Free, real native speakers, cultural exchange | Inconsistent partners, social awkwardness, no curriculum |
| Tandem | Community | High 7/10 | Medium 6/10 | Language exchange, video chat, corrections | Unreliable partners, time zone issues, no structure |
| Elsa Speak | App | Medium 7/10 | Medium 5/10 | Excellent pronunciation AI, accent training | Pronunciation-only focus, limited conversation |
| Lingoda | Marketplace | Medium 7/10 | High 8/10 | Small group classes, structured curriculum | Very expensive, fixed schedule, less personalized |
| Verbly.me | Hybrid AI | High 9/10 | High 9/10 | Unlimited AI practice + optional human guidance, affordable, 24/7 availability | AI not as nuanced as human (yet), newer platform |

Key Insight: The competitive landscape revealed a clear gap in the market:

- App-based platforms (Duolingo, Babbel) excel at gamification but offer limited real conversation practice

- Marketplace platforms (italki, Preply) provide excellent personalized conversation but are expensive ($15-40/hour) and scheduling-dependent

- Community platforms (HelloTalk, Tandem) offer free practice but lack structure and consistent quality

- Verbly.me's opportunity: Combine the affordability and availability of apps with the personalization and conversation quality of human tutors through AI, while offering optional human teacher integration for those who want it

Design Process & Iterations

Information Architecture

- Conducted card sorting with 28 participants (16 learners, 12 teachers) to validate mental models

- Designed dual IA structures: learner-focused (practice, courses, progress) and teacher-focused (students, lessons, materials)

- Tested 4 navigation patterns with tree testing; final version achieved 89% task success rate

- Established clear module hierarchy reducing navigation depth from 4 levels to 2 for core actions

- Created seamless role-switching for users who are both learners and teachers (31% of user base)

Wireframing & Concept Validation

- Sketched 80+ wireframe variations for key flows: AI chat interface, lesson builder, vocabulary manager, progress dashboard

- Built 12 interactive low-fidelity prototypes in Figma for critical user journeys

- Tested with 24 users across 3 rounds, identifying major friction in: chat correction visibility, lesson template customization, and vocabulary organization

- Iterated 4 times on the AI chat interface, reaching 94% comprehension of correction feedback in the final concept

UI Design System & Pattern Library

- Built comprehensive design system: 62+ components, 12 semantic color tokens, 5 typography scales, 8px grid system

- Established conversation patterns: Message bubbles with inline corrections, expandable grammar explanations, pronunciation playback controls, confidence indicators

- Defined educational UI patterns: Lesson card structures, exercise types (fill-blank, multiple-choice, speaking prompts), progress visualizations, achievement badges

- Created teacher workflow patterns: Drag-drop lesson builders, bulk vocabulary import, student comparison views, automated report generation

- Implemented responsive breakpoints: Mobile-first for learner practice, desktop-optimized for teacher administration

- Developed motion language: 25+ micro-interactions for feedback moments (correction appears, level up, streak milestone, new achievement)

- Documented extensively: 180+ specification screens covering all states, error handling, empty states, loading patterns

UI Solutions & Key Features

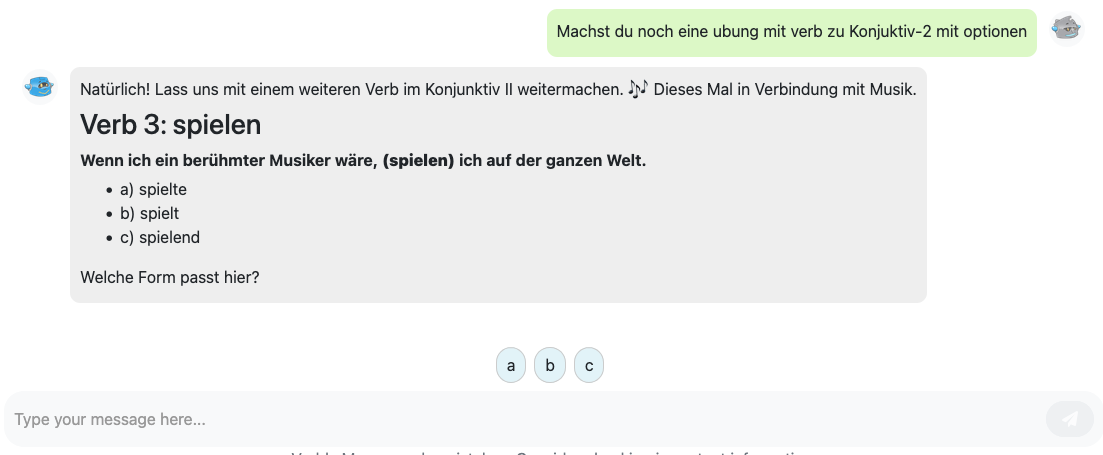

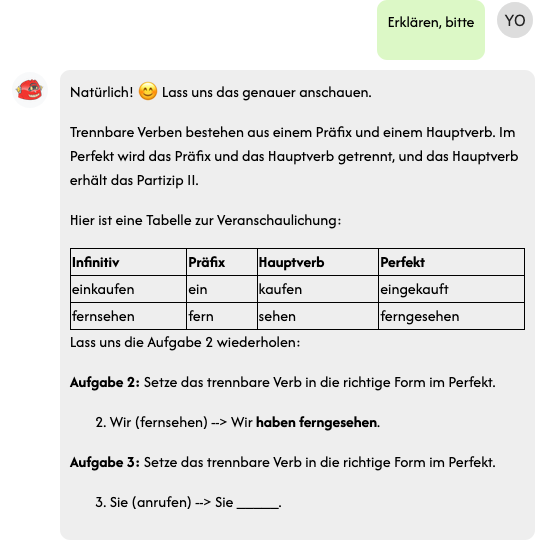

AI Conversation Interface

Designed natural chat experience with real-time grammar/vocabulary corrections appearing inline. Implemented three correction modes: aggressive (every mistake), balanced (major errors), minimal (comprehension-blocking only). Added expandable grammar explanations, translation tooltips, and "challenge correction" option. Users reported 88% satisfaction with correction clarity and timing. Average practice session increased from 8 to 19 minutes.
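The three correction modes can be read as a severity threshold applied to whatever mistakes the AI detects. The sketch below illustrates that idea only; the `Severity` scale, the `Correction` type, and the sample sentences are hypothetical and are not taken from the Verbly.me implementation.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity scale for a detected mistake."""
    MINOR = 1      # small slips that do not hurt understanding
    MAJOR = 2      # clear grammar or vocabulary errors
    BLOCKING = 3   # errors that prevent comprehension


# Minimum severity surfaced in each mode (mode names follow the text above).
MODE_THRESHOLD = {
    "aggressive": Severity.MINOR,    # every mistake
    "balanced": Severity.MAJOR,      # major errors
    "minimal": Severity.BLOCKING,    # comprehension-blocking only
}


@dataclass
class Correction:
    original: str
    suggestion: str
    severity: Severity


def visible_corrections(corrections, mode):
    """Return only the corrections the chosen mode should show inline."""
    threshold = MODE_THRESHOLD[mode]
    return [c for c in corrections if c.severity >= threshold]


# Made-up sample data for illustration.
corrections = [
    Correction("Ich habe gegangen", "Ich bin gegangen", Severity.MAJOR),
    Correction("das Mädchen, die", "das Mädchen, das", Severity.MINOR),
    Correction("Ich will Arzt bekommen", "Ich will Arzt werden", Severity.BLOCKING),
]
```

With this sample data, "aggressive" surfaces all three corrections, "balanced" two, and "minimal" only the false-friend error that changes the meaning.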

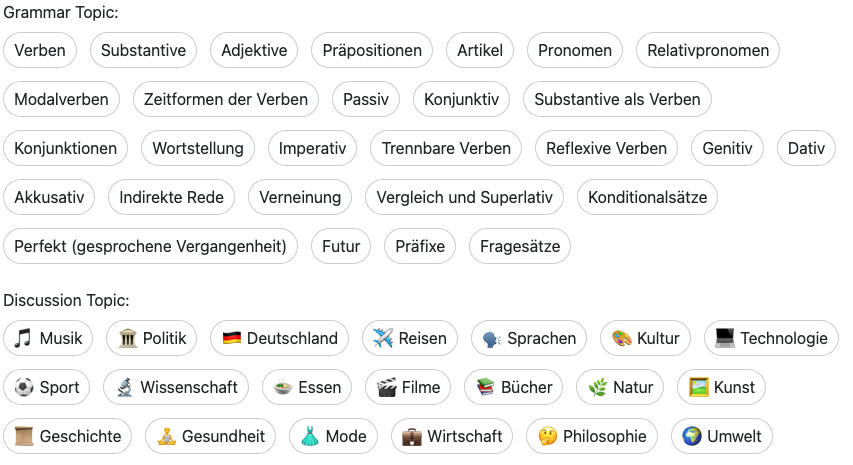

Topic Selection & Conversation Starters

Created browsable topic library with 150+ conversation starters across 12 categories (travel, business, hobbies, culture, etc.) organized by CEFR level. Implemented "surprise me" randomizer and personalized suggestions based on learning history. Added custom topic input for specific vocabulary practice. Topic-guided conversations showed 34% higher completion rate vs. free-form chat.

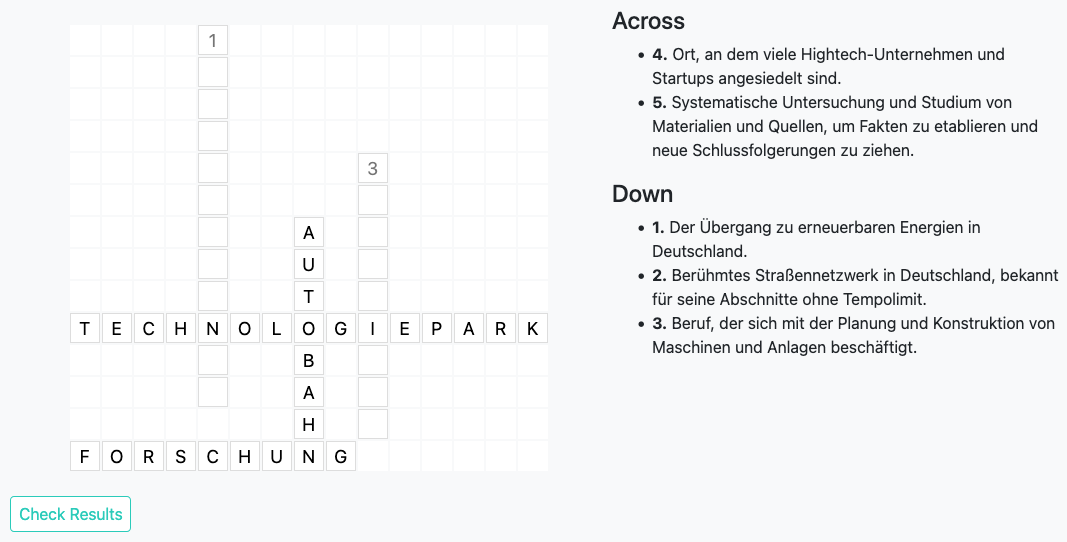

Interactive Learning Games

Designed 6 game types reinforcing vocabulary and grammar: word matching, sentence builder, listening comprehension, pronunciation challenge, translation race, grammar quiz. All games pull from the user's recent conversation mistakes and saved vocabulary, creating personalized review. Implemented adaptive difficulty and spaced repetition algorithms. Games drove a 156% increase in vocabulary retention (measured via follow-up quizzes).

Structured Course Builder (Teacher Tool)

Created intuitive lesson builder with drag-drop module assembly, pre-built templates, and AI-assisted content generation. Teachers input learning objectives; system suggests exercises, conversation topics, and vocabulary sets. Integrated multimedia support (audio, images, video embeds). Built-in versioning and sharing across teacher network. Reduced lesson creation time from 90 minutes to 26 minutes (71% reduction).

Smart Vocabulary Manager

Designed automated vocabulary collection from AI conversations with one-tap save, context preservation, and example sentence capture. Implemented categorization (folders, tags, CEFR levels), bulk import/export, and flashcard generation. Added spaced repetition scheduler and mastery tracking. Teachers can assign vocabulary sets to students and monitor acquisition. Active vocabulary lists averaged 340 words per user (vs. 80 in manual tracking systems).
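The case study does not say which spaced repetition algorithm the scheduler uses. As one possible illustration, the sketch below uses a simple Leitner-box scheme: a correct answer moves a word to a box with a longer review interval, and a mistake sends it back to the first box. The interval values, the `Card` type, and the "last box means mastered" rule are all assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed review intervals in days for Leitner boxes 1..5 (illustrative values).
INTERVALS = {1: 1, 2: 2, 3: 4, 4: 8, 5: 16}
LAST_BOX = max(INTERVALS)


@dataclass
class Card:
    word: str
    box: int = 1
    due: date = date.min  # a new card is due immediately


def review(card, correct, today):
    """Move the card between boxes and schedule its next review."""
    if correct:
        card.box = min(card.box + 1, LAST_BOX)
    else:
        card.box = 1  # forgotten words restart at the shortest interval
    card.due = today + timedelta(days=INTERVALS[card.box])
    return card


def is_mastered(card):
    """Assumed definition of mastery: the card has reached the last box."""
    return card.box == LAST_BOX
```

Under these assumptions, a word answered correctly on every review reaches the last box after four reviews, while a single miss returns it to daily review.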

Progress Dashboard & Analytics

Created comprehensive progress visualization showing: speaking time, vocabulary acquired, grammar patterns mastered, streak maintenance, CEFR level estimation. For teachers: student comparison views, engagement metrics, common error patterns, intervention triggers. Implemented goal-setting with milestone celebrations. Dashboard engagement correlated with 43% higher retention (users who checked progress weekly stayed 6.2 months vs. 4.3 months).

Pronunciation Feedback System

Designed visual pronunciation feedback using waveform visualization, phoneme highlighting, and native speaker comparison. Implemented recording interface with playback, slow-motion repeat, and targeted practice for difficult sounds. Added AI scoring (0-100) with specific improvement suggestions. Pronunciation scores improved by an average of 38 points over an 8-week usage period.

Teacher-Student Collaboration Mode

Designed hybrid model where teachers assign AI practice sessions, review conversation transcripts, leave feedback, and supplement with live tutoring. Built scheduling integration, session notes, and assignment tracking. Created teacher feedback interface overlaying AI corrections with human annotations. 68% of learners used hybrid mode; these users showed 2.4x faster progress than AI-only or tutor-only groups.

Usability Testing & Validation

Testing Methodology

- Conducted 8 rounds of moderated usability testing (10-15 participants each, split learners/teachers)

- Ran unmoderated remote tests via Maze and UserTesting with 200+ participants for quantitative metrics

- Tested across 7 key scenarios: first conversation, correction comprehension, vocabulary saving, game completion, lesson creation, student progress review, pronunciation practice

- Measured: task success rates, time-on-task, error rates, System Usability Scale (SUS), Net Promoter Score (NPS)

- Conducted A/B tests on 5 critical features with 300+ users post-beta launch
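Two of the measures listed above reduce to short formulas. The sketch below shows the standard Net Promoter Score calculation (percentage of 9-10 ratings minus percentage of 0-6 ratings) and a plain task success rate. The function names are illustrative, and no study data is reproduced here.

```python
def net_promoter_score(ratings):
    """NPS on 0-10 ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def task_success_rate(outcomes):
    """Percentage of task attempts that were completed successfully."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return 100 * sum(1 for ok in outcomes if ok) / len(outcomes)
```

For example, the made-up ratings `[10, 9, 8, 7, 6]` contain two promoters and one detractor, so `net_promoter_score` returns 20.0.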

Critical Iterations Based on Testing

Solution: Redesigned with three timing options: instant, end-of-message, end-of-conversation. Added visual "correction available" indicator without forcing interruption. Satisfaction increased from 62% to 91%.
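One way to picture the three timing options is as a small buffer that decides when a correction is released to the chat UI. This is a hedged sketch under assumed names (`CorrectionDelivery`, `on_message_sent`, `on_conversation_end`), not the product's actual logic.

```python
TIMINGS = ("instant", "end_of_message", "end_of_conversation")


class CorrectionDelivery:
    """Holds corrections back until the learner's chosen timing allows them."""

    def __init__(self, timing):
        if timing not in TIMINGS:
            raise ValueError(f"unknown timing: {timing}")
        self.timing = timing
        self.pending = []

    def add(self, correction):
        """Register a correction and return what should be shown right now."""
        if self.timing == "instant":
            return [correction]
        self.pending.append(correction)
        return []

    @property
    def has_pending(self):
        """True while held-back corrections exist (drives the indicator)."""
        return bool(self.pending)

    def on_message_sent(self):
        """Release held corrections if the timing is end-of-message."""
        if self.timing == "end_of_message":
            return self._flush()
        return []

    def on_conversation_end(self):
        """Release anything still held when the conversation finishes."""
        return self._flush()

    def _flush(self):
        shown, self.pending = self.pending, []
        return shown
```

In this sketch the `has_pending` flag plays the role of the "correction available" indicator: it signals that feedback exists without interrupting the learner.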

Solution: Redesigned with CEFR-adaptive explanations, visual examples, and "explain like I'm 5" option. Added translation to native language toggle. Comprehension jumped to 88%.

Solution: Added "guided mode" wizard with smart defaults and template library. Kept "advanced mode" for experienced teachers. Completion rate rose from 44% to 87%.

Solution: Implemented smart auto-categorization by topic, automatic CEFR tagging, and powerful search/filter. Added "study now" queue prioritizing forgotten words. Daily vocabulary review usage increased 340%.

Solution: Reframed scoring as "similarity to native speaker" with encouraging language. Added "practice mode" (no scoring, just feedback). Showed progress over time vs. absolute scores. Feature adoption increased from 59% to 84%.

- 96% task completion rate (final)

- 19 min average practice session length

- 4.7/5 System Usability Scale (SUS)

- 8% error rate (down from 34%)